How to Unleash the Power of ChatGPT With Your Mendix Data

ChatGPT, the conversational AI model developed by OpenAI, has the potential to enhance Mendix solutions across various industries, including customer service, education, and healthcare.

ChatGPT can understand human conversations using deep learning techniques and provide meaningful responses. The real question is: How can businesses feed their Mendix data to ChatGPT to unleash its power?

Integrating ChatGPT into a Mendix app is straightforward. Capgemini, for example, created a connector that can shorten the process to under five minutes.

As shown in the video above, you can ask ChatGPT which of your products is the most successful and why. However, ChatGPT doesn’t know your products, so it can’t provide a valid answer. The solution is to feed your Mendix data to ChatGPT, and the right way to do that depends on how much data you want to provide.

Fine-tuning ChatGPT to your needs

The recommended approach for an enterprise-grade solution using large datasets is “model training,” also known as “fine-tuning.” However, this approach requires significant resources and labor, including GPU resources and a team of data scientists and engineers. Fine-tuning can take weeks or months, and the resulting model must be continuously evaluated and updated.

A simpler way to provide context to ChatGPT is to augment each prompt or question with relevant context data. While this method is easier to implement, it has limitations, which we explore below.

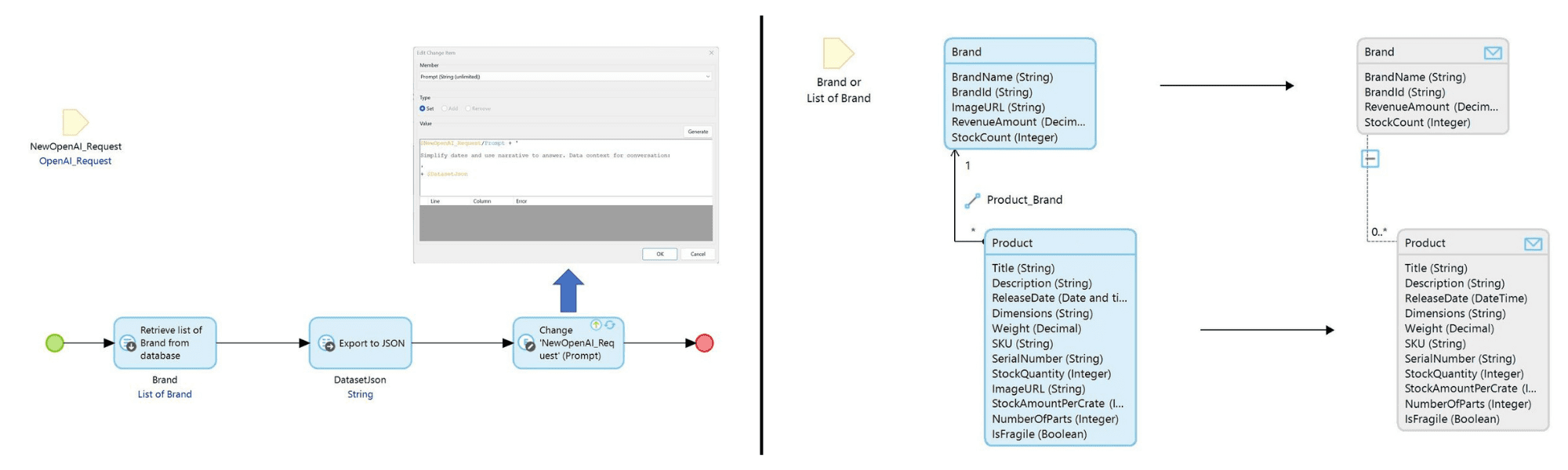

To demonstrate this approach in Mendix for the use case shown in the video, an Export Mapping was created to export brands and their related product details to a JSON string. The resulting JSON string, containing the brand and product data, was then added to each prompt or question as context.
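The prompt-augmentation step can be sketched outside Mendix in a few lines of Python. This is only an illustration under stated assumptions: the JSON shape, the sample brand and product values, and the `build_messages` helper are hypothetical stand-ins for whatever the Export Mapping actually produces. The role/content message layout follows the format used by OpenAI's chat models.

```python
import json

# Hypothetical stand-in for the JSON string produced by the Export Mapping.
context_json = json.dumps({
    "brands": [
        {
            "name": "ExampleBrand",
            "products": [
                {"name": "Widget A", "unitsSold": 1200, "rating": 4.6},
                {"name": "Widget B", "unitsSold": 300, "rating": 3.9},
            ],
        }
    ]
})


def build_messages(context: str, question: str) -> list[dict]:
    """Prepend the exported data to the user's question as context."""
    system = (
        "Answer using only the brand and product data below.\n"
        f"DATA:\n{context}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    context_json, "Which product is the most successful, and why?"
)
```

The resulting `messages` list would be sent as the body of a chat completion request. Because the model keeps no memory between separate API calls, the same context has to be rebuilt and sent with every question.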

Since the dataset in question was relatively small, the above worked well. However, there are several temporary limitations associated with this approach, such as:

- Context data must be provided for every question.

- Context data is limited in size.

- Privacy and security concerns, as OpenAI APIs have limited functionality in these areas.
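One common way to work within the size limit is to send only the records most relevant to the question. The sketch below is an assumption about how that could look, not part of the original demo: `select_context` is a hypothetical helper, the keyword match is deliberately naive, and the character budget is only a rough proxy for the model's token limit.

```python
import json


def select_context(products: list[dict], question: str, max_chars: int) -> str:
    """Return a JSON array of products that fits within max_chars.

    Products whose name appears in the question are placed first; the rest
    keep their original order. Products are added until the budget is hit.
    """
    q = question.lower()
    # sorted() is stable, so products with equal keys keep their order.
    ranked = sorted(products, key=lambda p: p["name"].lower() not in q)

    selected: list[dict] = []
    for product in ranked:
        candidate = json.dumps(selected + [product])
        if len(candidate) > max_chars:
            break
        selected.append(product)
    return json.dumps(selected)


# Hypothetical sample data and budget.
products = [
    {"name": "Widget A", "unitsSold": 1200},
    {"name": "Widget B", "unitsSold": 300},
    {"name": "Widget C", "unitsSold": 50},
]
context = select_context(products, "How is Widget C selling?", max_chars=60)
```

With this budget, only the product named in the question fits, so the prompt stays small while still carrying the data the question needs.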

These limitations are expected to be temporary. The Microsoft Azure OpenAI API, currently available as a limited access preview, will help with most of these concerns.

Azure OpenAI runs on the same models as OpenAI and has more flexible limits, private networking, regional availability, and responsible AI content filtering. Other cloud service providers are also working around the clock to offer similar AI capabilities.
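From the calling application's side, the most visible difference is how a request is addressed: Azure OpenAI routes calls to a named deployment inside your own resource and authenticates with an `api-key` header. The sketch below only builds the URL and headers and sends nothing. The `azure_chat_request` helper and all four argument values are placeholders, and the API version shown may be outdated, so check the current Azure documentation before relying on it.

```python
def azure_chat_request(resource: str, deployment: str, api_version: str, api_key: str):
    """Build the URL and headers for an Azure OpenAI chat completions call.

    Nothing is sent; this only shows how the request is addressed.
    """
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    headers = {"api-key": api_key, "Content-Type": "application/json"}
    return url, headers


# All four values below are placeholders, not real resources or credentials.
url, headers = azure_chat_request(
    resource="my-resource",
    deployment="my-chat-deployment",
    api_version="2024-02-01",
    api_key="<your-key>",
)
```

The request body carries the same role/content messages as a call to OpenAI's own API, so a prompt-augmentation step written for one service should need little change to target the other.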

TL;DR

Integrating ChatGPT in Mendix can be a game-changer for businesses, providing enhanced conversational capabilities to their applications. To unleash the power of ChatGPT, businesses must feed relevant data to the AI model.

The recommended approach for an enterprise-grade solution using large datasets is model training or fine-tuning, which requires significant resources and labor. A more straightforward approach involves augmenting prompts and questions with relevant context data. Still, this approach has limitations, such as the need to provide context data for every question, limited context data size, and privacy and security concerns.

The Microsoft Azure OpenAI API can help alleviate most of these concerns with more flexible limits, private networking, regional availability, and responsible AI content filtering.
